XBRL for ABS: A Technical Guide to Common Pitfalls and Fixes

Structured finance runs on precision, yet the SEC's XBRL mandate for Asset-Backed Securities (ABS) introduced a minefield of potential data integrity issues. For analysts, data engineers, and quants, a seemingly minor tagging error can cascade into flawed risk models, broken surveillance systems, and unreliable investment conclusions. This article moves beyond generic warnings to dissect the most common pitfalls in XBRL for ABS: the errors that compromise data lineage and programmatic analysis. Understanding these specific, technical failures is the first step toward building reproducible and trustworthy financial analytics for remittance data and deal monitoring. These are not theoretical problems; they are recurring data quality gaps found in live filings that directly affect everything from credit risk assessment to AI-driven market analysis. Visualizing this data lineage on platforms like Dealcharts makes the context behind every number transparent and auditable.

Market Context: Why XBRL Data Integrity Matters for ABS

The SEC's mandate for structured data in ABS filings (Regulation AB II) was intended to increase transparency and enable investors to perform more robust, data-driven analysis. By requiring machine-readable disclosures, regulators aimed to make the complex cash flows and collateral characteristics of ABS more accessible. However, the technical complexity of the XBRL standard, combined with the diversity of deal structures, has created significant challenges.

Common pitfalls, such as misclassifying deal structures or using incorrect temporal contexts, directly undermine this goal. When an XBRL filing contains errors, it forces data consumers—analysts, rating agencies, and fintech platforms—to revert to manual parsing of HTML or PDF documents. This not only defeats the purpose of structured data but also introduces costly delays and the potential for human error. For programmatic users, these inconsistencies break data ingestion pipelines and corrupt historical datasets, making reliable time-series analysis impossible. The result is a market where the promised efficiency of structured data remains partially unrealized due to persistent data quality issues.

The Data Source: From EDGAR Filings to Parsed Analytics

The primary source for all ABS performance data is the SEC's EDGAR database, specifically through filings like Form 10-D (monthly remittance reports) and 424B5 (prospectus supplements). These documents contain the raw, inline XBRL (iXBRL) that structures the numerical and categorical data.

For a data engineer or quantitative analyst, the workflow is:

- Access: Retrieve the filings programmatically via the EDGAR API or bulk downloads.

- Parse: Use an XBRL parser (like Arelle in Python or commercial libraries) to extract the facts, contexts, and taxonomies from the iXBRL instance.

- Link: Connect the extracted data to other datasets by linking the filing's Central Index Key (CIK) to deal identifiers like CUSIPs or ticker symbols.
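The access step above can be sketched in a few lines. This is a minimal illustration, assuming the JSON layout of EDGAR's `data.sec.gov/submissions` endpoint, where recent filings are stored as parallel arrays under `filings.recent`. The helper names (`edgar_submissions_url`, `select_filings`) and the sample values are hypothetical, and no network call is made.

```python
from typing import Dict, List


def edgar_submissions_url(cik: int) -> str:
    """Build the submissions URL for a CIK, zero-padded to 10 digits."""
    return f"https://data.sec.gov/submissions/CIK{cik:010d}.json"


def select_filings(submissions: Dict, form_type: str = "10-D") -> List[Dict[str, str]]:
    """Pick filings of one form type from the parallel arrays under filings.recent."""
    recent = submissions["filings"]["recent"]
    rows = zip(recent["form"], recent["accessionNumber"], recent["filingDate"])
    return [
        {"accession": accession, "filed": filed}
        for form, accession, filed in rows
        if form == form_type
    ]


# Made-up sample shaped like a submissions response (not real filing data).
sample = {
    "filings": {
        "recent": {
            "form": ["10-D", "8-K", "10-D"],
            "accessionNumber": [
                "0000000000-24-000003",
                "0000000000-24-000002",
                "0000000000-24-000001",
            ],
            "filingDate": ["2024-03-15", "2024-02-20", "2024-02-15"],
        }
    }
}

ten_d_filings = select_filings(sample)
print(edgar_submissions_url(1234567), len(ten_d_filings))
```

In a real pipeline the JSON would be fetched over HTTP with a descriptive `User-Agent` header, which the SEC asks automated clients to send.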

This process is where the pitfalls become apparent. A parser will ingest what is filed, regardless of whether it is logically correct. For example, if a filer misuses a context period, the parser will faithfully extract a nonsensical data point. This is why robust post-processing validation is critical. The Dealcharts API provides access to pre-parsed and validated data from these filings, linking CIKs to deals, shelves, and tranches to help bypass these initial parsing hurdles.

Example Workflow: Programmatic Validation of Context Periods

A common error is confusing "instant" and "duration" contexts. An "instant" context is for a point-in-time value (e.g., `endingBalance` as of `2024-01-31`), while a "duration" context is for a value over a period (e.g., `principalLosses` for `2024-01-01` to `2024-01-31`). Here is a simplified Python snippet demonstrating how to validate this, showcasing data lineage from source to insight.

```python
# Shows data lineage: EDGAR Filing -> XBRL Parse -> Context Validation -> Insight
from arelle import Cntlr


def validate_context_usage(xbrl_file_path):
    """Parses an XBRL filing and checks for common context misuse.

    This demonstrates the principle of verifying data lineage.
    """
    # 1. Source: Load the XBRL filing from a local path or URL
    cntlr = Cntlr.Cntlr()
    model_xbrl = cntlr.modelManager.load(xbrl_file_path)
    insights = []

    # 2. Transform: Iterate through facts and check their context
    for fact in model_xbrl.facts:
        # Skip facts whose concept or context could not be resolved
        if fact.concept is None or fact.context is None:
            continue
        concept_name = fact.concept.name
        context = fact.context

        # Define rules for specific concepts (the concept names are illustrative).
        # Arelle exposes the period type as isInstantPeriod / isStartEndPeriod.
        # Example: PrincipalLossesAmount should ALWAYS be a duration
        if "PrincipalLossesAmount" in concept_name and context.isInstantPeriod:
            insight = (
                f"Error: {concept_name} (Value: {fact.value}) "
                f"is tagged with an 'instant' context ({context.endDatetime}) "
                "but requires a 'duration'."
            )
            insights.append(insight)

        # Example: OutstandingBalance should ALWAYS be an instant
        if "OutstandingBalance" in concept_name and not context.isInstantPeriod:
            insight = (
                f"Error: {concept_name} (Value: {fact.value}) "
                "is tagged with a 'duration' context "
                "but requires an 'instant'."
            )
            insights.append(insight)

    cntlr.close()

    # 3. Insight: Report findings
    return insights


# --- Example Usage ---
# Assume 'filing.htm' is a local iXBRL file from EDGAR
# validation_results = validate_context_usage('path/to/your/filing.htm')
# for result in validation_results:
#     print(result)
```

This code demonstrates a verifiable pipeline: it ingests a source filing, applies a specific transformation (context validation), and produces an explainable insight (a list of specific errors).

Implications for Modeling and Explainable AI

Fixing these XBRL pitfalls is not just a data-cleaning exercise; it is fundamental to building reliable models and explainable AI in finance. When data lineage is verifiable—meaning every number can be programmatically traced to its source filing and context—it creates a foundation of trust.

- Improved Risk Modeling: Accurate, time-series data on delinquencies and losses allows for more precise credit modeling without manual adjustments for data errors.

- Enhanced Surveillance: Automated systems can reliably ingest 10-D data to monitor covenant compliance and performance triggers without false alarms caused by tagging mistakes.

- Explainable LLM Reasoning: For Large Language Models (LLMs) used in finance, structured and validated data is critical. An LLM can reason about a deal's performance more accurately if it can ingest clean, context-rich data. This "model-in-context" approach, a core theme of CMD+RVL, ensures that AI-driven insights are grounded in verifiable facts rather than statistical hallucinations. An explainable pipeline makes the LLM’s output auditable back to the source filing.

How Dealcharts Helps

Avoiding these XBRL pitfalls is more than a compliance exercise; it's fundamental to building robust, explainable financial models. Dealcharts connects these datasets—filings, deals, shelves, tranches, and counterparties—so analysts can publish and share verified charts without rebuilding data pipelines. By providing pre-validated, linked data, Dealcharts operationalizes the principle of verifiable data lineage, allowing you to focus on analysis rather than remediation. Explore how you can transform your ABS analysis at Dealcharts.

Conclusion

Mastering the nuances of XBRL for ABS is crucial for anyone building programmatic financial tools. The common pitfalls discussed are not just technicalities; they are barriers to achieving the transparency and efficiency that structured data promises. By focusing on data lineage, implementing programmatic validation, and leveraging platforms that provide clean, interconnected data, analysts and developers can build more powerful, reliable, and explainable models. This aligns with the broader CMD+RVL framework of creating reproducible and context-aware financial analytics.

1. Incorrect Deal Structure Classification and Tagging

One of the most foundational and damaging pitfalls in XBRL for ABS is the misclassification of the underlying deal structure. This error occurs when the filer selects an incorrect taxonomy schema or applies concepts from one asset class to another. It's the equivalent of building a financial model on the wrong template; every subsequent data point is misplaced, making the entire filing unreliable and difficult to parse programmatically.

This mistake propagates errors throughout the disclosure. If a residential mortgage-backed security (RMBS) is tagged using elements from the commercial real estate (CRE) taxonomy, crucial data points like FICO scores and loan-to-value ratios will be missing or incorrectly represented. The SEC’s validation rules may catch some blatant errors, but subtle misclassifications can slip through, leading to flawed data aggregation and analysis by investors and regulators.

Real-World Example

Consider an auto loan ABS where the preparer mistakenly uses XBRL tags from the credit card receivables taxonomy. Instead of tagging concepts like "Weighted Average Original Term of Pledged Receivables" (a key metric for auto loans), they might search for a similar-sounding but contextually incorrect tag from the credit card schema. This forces an illogical mapping, such as placing auto loan data into a field intended for "Principal Receivables," distorting the deal’s economic reality. This is particularly common in complex deals with multiple collateral types that are incorrectly simplified into a single-asset class filing.

Automated Checks and Recommended Fixes

To prevent this foundational error, a structured, multi-layered approach is essential. Automated systems can play a crucial role, but human oversight remains critical.

Automated Checks:

- Taxonomy Validation: Implement pre-filing checks that validate the selected taxonomy against the deal's CIK and the asset class described in the 424B5 prospectus.

- Element Usage Analysis: Use scripts to scan the XBRL instance document for element usage patterns. A filing tagged as "Auto Loan ABS" should not contain a high percentage of tags from the "Credit Card ABS" taxonomy. The Dealcharts API can be used to cross-reference CIKs with known deal structures to flag anomalies.
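The element usage analysis described above can be approximated by measuring what share of tagged concepts comes from each namespace prefix. The sketch below is illustrative only: the prefixes `auto` and `card`, the element names, and the 10% threshold are all hypothetical placeholders, not names from a published taxonomy.

```python
from collections import Counter
from typing import Dict, Iterable


def prefix_shares(qnames: Iterable[str]) -> Dict[str, float]:
    """Share of tagged concepts per namespace prefix (the text before ':')."""
    counts = Counter(qname.split(":", 1)[0] for qname in qnames)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {prefix: n / total for prefix, n in counts.items()}


def flag_foreign_usage(
    qnames: Iterable[str], expected_prefix: str, max_foreign_share: float = 0.10
) -> Dict[str, float]:
    """Return prefixes other than the expected one that exceed the threshold."""
    return {
        prefix: share
        for prefix, share in prefix_shares(qnames).items()
        if prefix != expected_prefix and share > max_foreign_share
    }


# Hypothetical concept names extracted from a filing labelled as an auto loan deal.
tagged = [
    "auto:OriginalTerm",
    "auto:VehicleValue",
    "auto:ObligorScore",
    "card:PrincipalReceivables",
]
print(flag_foreign_usage(tagged, expected_prefix="auto"))
```

A flagged prefix is a prompt for review, not proof of an error; mixed-collateral deals can legitimately draw on more than one schema.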

Best Practices and Fixes:

- Deal Documentation Review: The XBRL tagging process must begin with a thorough review of the prospectus supplement. The asset class, structural features, and key collateral characteristics should be documented before any tagging begins.

- Create a Validation Checklist: Develop an internal checklist that maps key sections of the prospectus (e.g., collateral summary) directly to the high-level XBRL taxonomy selection.

- Compliance Review: Mandate a review by a compliance or legal team member who understands the deal structure to sign off on the chosen taxonomy before detailed tagging is completed.

- Maintain Mapping Documentation: Create and preserve a clear mapping document that links each data point in the source report to its corresponding XBRL element, justifying the choice. This creates an auditable trail and ensures consistency for future filings.

2. Incomplete or Incorrect Pool-Level Loan Data Tagging

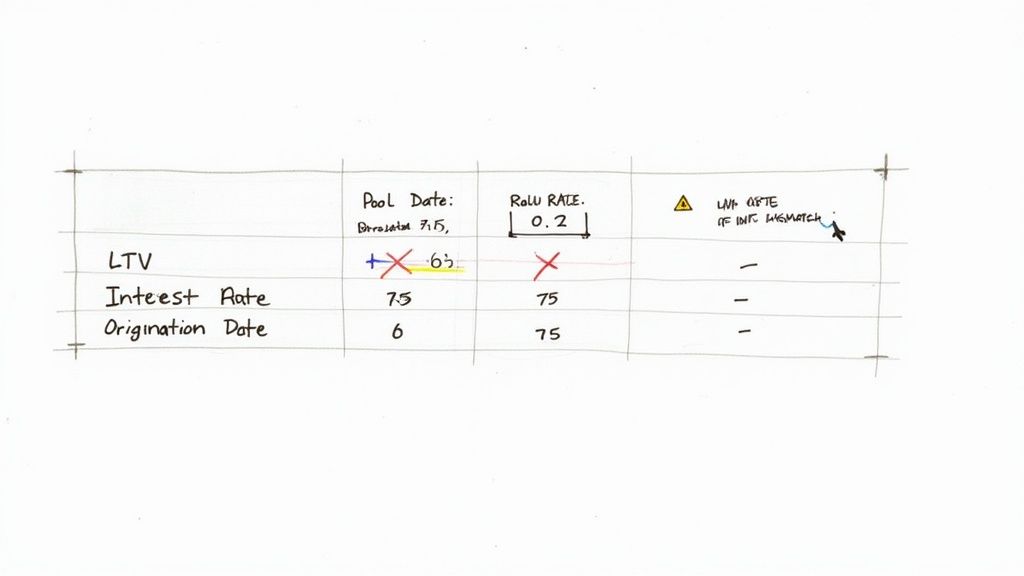

A critical and widespread pitfall in XBRL for ABS involves errors in tagging pool-level loan attributes. This mistake occurs when granular data points like origination dates, loan-to-value (LTV) ratios, credit scores, or interest rates are omitted, tagged with incorrect units, or assigned the wrong data type. Such errors directly undermine the utility of the structured data, preventing investors and analysts from performing accurate collateral analysis and violating the core principles of Regulation AB's pool data requirements.

The impact of incorrect pool-level tagging is severe. If stratification tables are missing key credit quality buckets or if a significant portion of the loan pool has incomplete origination dates, it becomes impossible to accurately model prepayment speeds or default risk. These are not just formatting mistakes; they are substantive data integrity failures that can lead to flawed investment models and mispriced risk. The machine-readable format becomes a source of misinformation rather than a tool for transparency.

Real-World Example

Imagine a residential mortgage-backed security (RMBS) filing where LTV ratios are tagged as percentages instead of decimals. A loan with an 80% LTV is entered as the integer `80` instead of the required decimal format `0.80`. An automated system ingesting this data would interpret the LTV as 8000%, a nonsensical value that would skew any weighted average calculation or risk assessment. Similarly, tagging an interest rate as `5.5` when the taxonomy requires it in basis points (`550`) can completely distort yield analysis. These unit and data type errors are common when data is manually transcribed without proper validation.

Automated Checks and Recommended Fixes

Preventing these granular data errors requires a combination of automated validation rules and rigorous internal processes that begin at the data source.

Automated Checks:

- Data Type and Unit Validation: Implement scripts that check each tagged data point against its expected data type (e.g., decimal, integer, date) and unit of measure (e.g., `pure`, `percent`, basis points) as defined in the ABS taxonomy.

- Range and Reasonableness Rules: Create automated checks to flag values outside of logical ranges. For instance, a rule could flag any LTV value greater than `1.5` (150%) or a FICO score outside the `300-850` range.

- Statistical Outlier Detection: Run statistical analyses on tagged data sets to identify outliers that may indicate a unit error. A sudden spike in the weighted average interest rate for a pool could signal a decimal-versus-percentage mistake.

- Cross-Validation: Programmatically cross-validate summary statistics reported in the filing (e.g., total pool balance, weighted average coupon) against calculations derived from the tagged loan-level data to ensure consistency. You can review examples of correctly structured pool data, such as those found in this Carvana Auto Receivables Trust filing on Dealcharts.
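The range rules and the cross-validation check above might look like the sketch below. The field names (`ltv`, `fico`, `rate`, `balance`), the bounds, and the tolerance are assumptions for illustration; real bounds should come from the taxonomy and the deal documents.

```python
from typing import Dict, List

# Illustrative bounds taken from the examples in the text.
RULES = {"ltv": (0.0, 1.5), "fico": (300, 850)}


def range_violations(loans: List[Dict[str, float]]) -> List[str]:
    """Flag missing values and values outside the configured bounds."""
    issues = []
    for i, loan in enumerate(loans):
        for field, (low, high) in RULES.items():
            value = loan.get(field)
            if value is None:
                issues.append(f"loan {i}: {field} missing")
            elif not low <= value <= high:
                issues.append(f"loan {i}: {field}={value} outside [{low}, {high}]")
    return issues


def wac_matches(loans: List[Dict[str, float]], reported_wac: float,
                tolerance: float = 1e-4) -> bool:
    """Recompute the balance-weighted average coupon and compare to the reported one."""
    total_balance = sum(loan["balance"] for loan in loans)
    computed = sum(loan["rate"] * loan["balance"] for loan in loans) / total_balance
    return abs(computed - reported_wac) <= tolerance


# Made-up loans: the second has a mis-scaled LTV and an impossible FICO score.
loans = [
    {"ltv": 0.8, "fico": 720, "rate": 0.05, "balance": 100.0},
    {"ltv": 80, "fico": 900, "rate": 0.07, "balance": 300.0},
]
for issue in range_violations(loans):
    print(issue)
print(wac_matches(loans, reported_wac=0.065))
```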

Best Practices and Fixes:

- Establish a Unit Standard Guide: Before tagging begins, create a clear internal guide that specifies the required unit and format for key data points (LTVs, rates, scores) based on the SEC’s taxonomy.

- Automate Data Mapping: Whenever possible, map data directly from the source loan-level database to the XBRL instance. This minimizes manual entry errors and ensures data lineage.

- Implement Tiered Reviews: Institute a multi-stage review process. The first review should focus on data accuracy and completeness, while a second review by an XBRL expert should confirm correct tag application and formatting.

- Use XBRL GL: For sophisticated issuers, using XBRL Global Ledger (GL) can help capture transactional source data with proper data types and context from the outset, ensuring that information flows correctly into the final ABS filing.

3. Inadequate Bond/Tranche-Level Disclosure Tagging

A critical and surprisingly common pitfall is the failure to tag bond and tranche-level disclosures comprehensively. This error occurs when filers provide incomplete or inconsistent data for individual tranches within a deal, undermining the core purpose of structured data: enabling precise, class-by-class analysis. This is one of the most detrimental pitfalls in XBRL for ABS because it directly obstructs an investor's ability to assess risk, subordination, and cash flow priority.

This oversight can render the XBRL filing almost useless for sophisticated modeling. Investors and analysts rely on accurately tagged data for each CUSIP, including its balance, coupon, rating, and place in the payment waterfall. When this information is missing for subordinate tranches or incorrectly reflects the capital structure, programmatic analysis becomes impossible, forcing a manual, error-prone reconciliation with the HTML prospectus.

Real-World Example

Imagine a CMBS deal where the filer meticulously tags the CUSIP, original balance, and credit rating for the senior AAA-rated tranches but provides incomplete data for the mezzanine and subordinate bonds. For instance, the interest rate type for a floating-rate junior tranche might be tagged as "Fixed" or left blank entirely. Furthermore, the payment priority sequence could be tagged incorrectly, showing a mezzanine tranche receiving principal payments before a more senior class under certain conditions, directly contradicting the offering documents. This misrepresentation of the deal's payment waterfall and credit enhancement makes automated risk and cash flow modeling dangerously inaccurate.

Automated Checks and Recommended Fixes

Preventing incomplete tranche-level tagging requires a systematic approach that reconciles the XBRL data with the source legal documents. Both automated validation and rigorous manual reviews are necessary to ensure accuracy.

Automated Checks:

- Tranche Count Verification: Implement a script that counts the number of unique tranches described in the prospectus's capital structure table and compares it to the number of tranche-level data groups tagged in the XBRL instance.

- CUSIP and Balance Reconciliation: Programmatically cross-reference the tagged CUSIPs and their original balances against a master list extracted from the offering documents to flag any discrepancies or omissions.

- Waterfall Logic Validation: While complex, rules-based checks can be developed to validate basic waterfall logic, such as ensuring that principal payments to subordinate classes do not precede those to senior classes in standard sequential-pay structures.
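A minimal sketch of the reconciliation and waterfall checks above, assuming the master schedule and the tagged data have already been reduced to simple Python structures. The identifiers (`CLASS_A` and so on) are placeholders, not real CUSIPs, and the waterfall rule covers only a strict sequential-pay structure.

```python
from typing import Dict, List, Tuple


def reconcile_tranches(master: Dict[str, float], tagged: Dict[str, float],
                       tolerance: float = 0.01) -> List[str]:
    """Compare tagged original balances against the master tranche schedule."""
    issues = []
    for key in sorted(master.keys() - tagged.keys()):
        issues.append(f"{key}: in offering documents but not tagged")
    for key in sorted(tagged.keys() - master.keys()):
        issues.append(f"{key}: tagged but not in offering documents")
    for key in sorted(master.keys() & tagged.keys()):
        if abs(master[key] - tagged[key]) > tolerance:
            issues.append(f"{key}: balance {tagged[key]} differs from {master[key]}")
    return issues


def sequential_pay_violations(tranches: List[Tuple[str, float, float]]) -> List[str]:
    """Tranches are (name, principal_paid, ending_balance), ordered senior to junior.

    Flags any tranche that received principal while a more senior tranche
    still had a balance outstanding.
    """
    violations = []
    senior_outstanding = False
    for name, principal_paid, ending_balance in tranches:
        if principal_paid > 0 and senior_outstanding:
            violations.append(name)
        if ending_balance > 0:
            senior_outstanding = True
    return violations


master = {"CLASS_A": 500.0, "CLASS_B": 100.0, "CLASS_C": 50.0}
tagged = {"CLASS_A": 500.0, "CLASS_B": 110.0}
print(reconcile_tranches(master, tagged))
print(sequential_pay_violations([("A", 100.0, 50.0), ("B", 10.0, 90.0), ("C", 0.0, 40.0)]))
```

Deals with pro-rata periods or trigger-dependent waterfalls would need rules drawn from the specific offering documents.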

Best Practices and Fixes:

- Create a Master Tranche Schedule: Before tagging, create a definitive spreadsheet mapping every tranche (CUSIP) to all its key characteristics: original balance, coupon/spread, rating (including agency and date), subordination level, and credit enhancement percentage.

- Use Consistent Naming Conventions: Apply a consistent and logical naming or numbering system for each tranche throughout the XBRL document to avoid confusion and ensure data points are correctly associated with their respective bonds.

- Tag All Credit Enhancement Details: For each tranche, meticulously tag its subordination level and any other forms of credit enhancement. This data is fundamental to risk assessment. A detailed view of a correctly structured CMBS deal, like the BMARK 2024-V5 transaction, illustrates how this granular data should be organized.

- Verify Waterfall Sequence: Double-check that the tagged payment waterfall sequence matches the prospectus exactly. This is a high-risk area for error and requires careful review by someone with a deep understanding of the deal structure.

- Reconcile XBRL with HTML: Perform a final, side-by-side reconciliation of the data in the final HTML prospectus tables and the tagged values in the XBRL instance document before filing.

4. Improper Use of Continuation and Extension Contexts

A subtle yet critical pitfall in XBRL for ABS is the improper use of contexts. XBRL contexts define the "who, what, and when" for a specific data point, specifying the entity, reporting period (instant or duration), and any unique scenarios. Misusing these contexts breaks the logical connection between the data and its meaning, rendering analytics useless and often causing SEC validation failures.

This error commonly occurs when filers confuse "instant" contexts, which represent a single point in time (e.g., the pool balance as of the end of the month), with "duration" contexts, which cover a period (e.g., total losses incurred during the month). Another frequent mistake is applying a single, generic context across different series in a multi-series securitization, which incorrectly merges their distinct performance data. Such errors completely undermine the structural integrity of the reported data.

Real-World Example

Imagine a servicer's report for a credit card ABS filing. The report states the total amount of charge-offs for the monthly collection period was $1.5 million. The preparer, rushing to complete the filing, mistakenly tags this value using an "instant" context defined for the period-end date, such as `AsOf_Sep30_2023`. The filing now incorrectly asserts that charge-offs were $1.5 million precisely at the stroke of midnight on September 30th, rather than over the entire month. This makes it impossible to calculate key performance metrics like the annualized charge-off rate and breaks time-series analysis for investors relying on the data.

Automated Checks and Recommended Fixes

Preventing context errors requires a systematic approach to defining and applying them, supported by automated validation and clear internal documentation.

Automated Checks:

- Context Type Validation: Implement rules to check that specific XBRL concepts are paired with the correct context type. For instance, a script can flag any instance where the concept `abs:PrincipalLossesIncurredAmount` (a duration-based metric) is associated with an instant context.

- Date Logic Verification: Use validation tools to check for logical consistency in context dates. A duration context's end date must always be after its start date. The Dealcharts API can be used to pull prior period dates for a CIK to ensure new filing contexts follow a logical sequence.
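The date-logic check can be sketched without any XBRL library once the context dates have been extracted. The function below is a hypothetical helper; the rule that each period starts the day after the prior period ended is an assumption that holds for contiguous monthly reporting but not for every deal.

```python
from datetime import date, timedelta
from typing import List, Optional


def context_date_issues(start: date, end: date,
                        prior_end: Optional[date] = None) -> List[str]:
    """Check a duration context's dates for basic logical consistency."""
    issues = []
    if end <= start:
        issues.append("duration end date is not after its start date")
    # Assumed convention: reporting periods are contiguous.
    if prior_end is not None and start != prior_end + timedelta(days=1):
        issues.append("period does not begin the day after the prior period ended")
    return issues


print(context_date_issues(date(2024, 1, 1), date(2024, 1, 31), prior_end=date(2023, 12, 31)))
print(context_date_issues(date(2024, 1, 31), date(2024, 1, 1)))
```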

Best Practices and Fixes:

- Establish a Context Naming Convention: Create a clear, standardized naming system for all contexts (e.g., `Dur_Jan01_2024_To_Jan31_2024` for durations, `AsOf_Jan31_2024` for instants). This reduces ambiguity and speeds up the review process.

- Use Context Templates: For recurring filings like 10-Ds, create and reuse context templates. This ensures that standard periods are defined consistently from one filing to the next, minimizing the risk of manual entry errors.

- Validate Context Application: Utilize XBRL viewing tools to visually inspect the instance document before filing. This allows preparers to confirm that data points like beginning balances, period activity, and ending balances are correctly assigned to their respective contexts.

- Document All Custom Contexts: If using extension contexts for non-standard scenarios (e.g., stress tests, different reporting tranches), maintain separate documentation that clearly defines the purpose and scope of each one. This provides an audit trail and ensures clarity for data users.
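A naming convention is easiest to enforce when the identifiers are generated, not typed. The sketch below reproduces the example identifiers from the convention above; the function names are hypothetical, and month abbreviations are hard-coded so the output does not depend on the system locale.

```python
from datetime import date

_MONTHS = ("Jan", "Feb", "Mar", "Apr", "May", "Jun",
           "Jul", "Aug", "Sep", "Oct", "Nov", "Dec")


def _stamp(d: date) -> str:
    """Format a date as MonDD_YYYY, e.g. Jan31_2024."""
    return f"{_MONTHS[d.month - 1]}{d.day:02d}_{d.year}"


def instant_context_id(as_of: date) -> str:
    """Identifier for a point-in-time context."""
    return f"AsOf_{_stamp(as_of)}"


def duration_context_id(start: date, end: date) -> str:
    """Identifier for a context covering a period."""
    return f"Dur_{_stamp(start)}_To_{_stamp(end)}"


print(instant_context_id(date(2024, 1, 31)))
print(duration_context_id(date(2024, 1, 1), date(2024, 1, 31)))
```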

5. Missing or Incorrect Extension Taxonomy Elements

A critical yet often mishandled aspect of XBRL for ABS filings is the use of extension taxonomies. Extensions are necessary when the standard SEC taxonomy lacks an element to represent a unique, material characteristic of a deal. One of the most significant pitfalls in XBRL for ABS is either failing to create a necessary extension or creating one improperly, which defeats the purpose of structured data by obscuring vital, deal-specific information.

This error leads to a direct loss of data fidelity. If a custom credit enhancement mechanism or a unique performance trigger is not defined as an extension, that information becomes invisible to programmatic analysis. Conversely, creating extensions with generic names like "OtherFactor," incorrect data types, or missing definitions renders the data useless for comparison and can trigger SEC validation failures, delaying the filing process.

Real-World Example

Imagine a synthetic securitization where risk is transferred via credit default swaps rather than a true sale of assets. The standard ABS taxonomies do not have specific elements to capture the nuances of synthetic collateral or counterparty risk metrics. A preparer might be tempted to force this data into a vaguely related standard element or, worse, omit it entirely from the XBRL filing.

The correct approach is to create extension elements like "NotionalAmountOfCreditDefaultSwaps" or "CounterpartyCreditRating." However, if these are created incorrectly, for instance, by defining the notional amount as a percentage (`percentItemType`) instead of a monetary value (`monetaryItemType`), the tagged data becomes fundamentally flawed. This not only misrepresents the deal's structure but also breaks any automated models attempting to ingest and calculate the issuer's true risk exposure.

Automated Checks and Recommended Fixes

Preventing extension-related errors requires a disciplined process that prioritizes standardization before customization. Both automated validation and rigorous internal governance are key.

Automated Checks:

- Extension Element Analysis: Implement a script that flags all extension elements in an XBRL instance. The script should check for adherence to naming conventions and ensure that each extension has a non-generic, comprehensive label and definition.

- Data Type Validation: Before submission, run a validation check that verifies the `xbrli:unit` and data type of each extension against expected values. For example, any element containing "Percentage" in its name should likely use a `pure` unit or the `percentItemType` data type.
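The extension checks above can be prototyped on a plain list of element definitions. In this sketch the dictionary keys, the list of "generic" name prefixes, and the name-to-type heuristics are all assumptions for illustration; type names are compared case-insensitively and without their namespace prefix.

```python
import re
from typing import Dict, List

# Hypothetical patterns for names that say nothing about the concept.
GENERIC_NAME = re.compile(r"^(Other|Misc|Custom|Item)", re.IGNORECASE)
CAMEL_CASE = re.compile(r"^[A-Z][A-Za-z0-9]*$")


def _local_type(data_type: str) -> str:
    """Drop any namespace prefix and lowercase the type name."""
    return data_type.split(":")[-1].lower()


def extension_issues(elements: List[Dict[str, str]]) -> List[str]:
    """Flag extension elements with weak names, missing docs, or suspicious types."""
    issues = []
    for element in elements:
        name = element["name"]
        data_type = _local_type(element["type"])
        if GENERIC_NAME.match(name):
            issues.append(f"{name}: generic name")
        if not CAMEL_CASE.match(name):
            issues.append(f"{name}: not CamelCase")
        if not element.get("documentation", "").strip():
            issues.append(f"{name}: missing documentation label")
        if "Percentage" in name and data_type != "percentitemtype":
            issues.append(f"{name}: expected a percent type, found {element['type']}")
        if "Amount" in name and data_type != "monetaryitemtype":
            issues.append(f"{name}: expected a monetary type, found {element['type']}")
    return issues


extensions = [
    {"name": "OtherFactor", "type": "stringItemType", "documentation": ""},
    {"name": "NotionalAmountOfCreditDefaultSwaps", "type": "percentItemType",
     "documentation": "Notional amount of credit default swaps referenced by the deal."},
]
for issue in extension_issues(extensions):
    print(issue)
```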

Best Practices and Fixes:

- Exhaust Standard Taxonomy First: Before creating any extension, thoroughly search the entire standard ABS taxonomy to ensure a suitable element does not already exist. This prevents unnecessary and non-standard data points.

- Document Justification: Maintain a log that documents the business justification for every single extension element created. This record should explain why no standard element was sufficient.

- Adopt a Naming Convention: Use clear, descriptive "CamelCase" names for extensions that precisely describe the concept (e.g., `WeightedAverageLifeOfFloatingRateTranche`).

- Provide Comprehensive Documentation: Every extension must have a complete set of labels (standard, terse, documentation) that clearly define the business meaning of the element, how it is calculated, and its purpose within the deal structure.

- Link to Standard Concepts: Where possible, use XBRL relationship links (e.g., `general-special`) to anchor the extension element to the closest concept in the standard taxonomy, providing context for data consumers.

6. Performance Data and Historical Seasoning Information Errors

Among the most critical pitfalls in XBRL for ABS are errors in tagging performance and seasoning data. Metrics like delinquencies, losses, and prepayments are the lifeblood of investor analysis, and mistakes here directly distort credit models and risk assessments. This pitfall occurs when preparers miscalculate metrics, use inconsistent definitions, or incorrectly tag historical data, rendering period-over-period trend analysis useless.

These errors undermine the very purpose of standardized reporting by injecting noise into what should be clean, comparable data. For instance, if cumulative loss rates are restated in a servicer report but the corresponding XBRL filings from prior periods are not corrected, a permanent inconsistency is created in the public data record. This forces analysts to manually reconcile discrepancies, defeating the efficiency gains XBRL is meant to provide.

Real-World Example

Consider a servicer for a CMBS deal who reports monthly performance. In one period, they tag the "Amount of 60-89 Days Delinquent Mortgage Loans" correctly. In the next period, a new preparer inadvertently aggregates the 30-59 day delinquencies into the same XBRL tag, inflating the reported number for the 60-89 day bucket. Programmatic analysis would flag this as a sudden, dramatic spike in serious delinquencies, potentially triggering erroneous credit alerts or flawed model outputs. Another common error is calculating pool seasoning from the deal's cut-off date instead of from the individual loan origination dates, understating the true age and expected performance of the collateral.
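The seasoning error is easy to see with a small worked example. The sketch below assumes seasoning is measured in whole calendar months and weighted by current balance; both conventions, along with the loan data, are illustrative assumptions, and the actual methodology should follow the deal documents.

```python
from datetime import date
from typing import List, Tuple


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end (day of month ignored)."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def weighted_average_seasoning(loans: List[Tuple[date, float]], as_of: date) -> float:
    """Balance-weighted seasoning in months, measured from each origination date."""
    total_balance = sum(balance for _, balance in loans)
    weighted = sum(months_between(orig, as_of) * balance for orig, balance in loans)
    return weighted / total_balance


# Made-up pool: (origination date, current balance).
pool = [(date(2022, 1, 15), 100.0), (date(2023, 1, 15), 300.0)]
as_of = date(2024, 1, 31)
cut_off = date(2023, 6, 1)

print(weighted_average_seasoning(pool, as_of))  # measured from origination dates
print(months_between(cut_off, as_of))           # measured from the cut-off date
```

With these inputs the origination-based figure is 15 months, while measuring from the cut-off date gives 7 months for every loan, which is the understatement described above.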

Automated Checks and Recommended Fixes

Robust validation logic and strict adherence to internal documentation are paramount for ensuring the accuracy of performance data. This requires both automated checks and rigorous manual oversight.

Automated Checks:

- Reasonableness Rules: Implement validation rules that check if performance rates are within logical bounds. For example, a script can flag any delinquency, loss, or prepayment rate that exceeds historical highs for that asset class or unexpectedly deviates from the previous period's report by a significant margin.

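- Inter-Period Consistency: Write scripts to compare key cumulative metrics (like cumulative gross losses) from the current filing against the prior period's filing. The current value should be greater than or equal to the prior value; any decrease signals a potential restatement or error that requires investigation. Cumulative net losses can legitimately decline when recoveries exceed new losses, so treat a decrease there as a prompt for review, not an automatic error.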

- Component Summation: Automatically validate that the sum of delinquency buckets (e.g., 30-59, 60-89, 90+ days) equals the "Total Delinquent" tag. This simple check catches common aggregation errors.
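The inter-period and summation checks above reduce to a few lines once the tagged values are extracted. The bucket labels, period labels, and tolerance below are illustrative assumptions.

```python
from typing import Dict, List, Tuple


def bucket_sum_matches(buckets: Dict[str, float], reported_total: float,
                       tolerance: float = 0.01) -> bool:
    """Check that delinquency buckets add up to the reported total."""
    return abs(sum(buckets.values()) - reported_total) <= tolerance


def cumulative_decreases(series: List[Tuple[str, float]]) -> List[str]:
    """Return the periods in which a cumulative metric fell below the prior period."""
    return [
        period
        for (_, previous), (period, current) in zip(series, series[1:])
        if current < previous
    ]


buckets = {"30-59": 100.0, "60-89": 50.0, "90+": 25.0}
print(bucket_sum_matches(buckets, reported_total=175.0))

cumulative_losses = [("2024-01", 1.0), ("2024-02", 1.5), ("2024-03", 1.2)]
print(cumulative_decreases(cumulative_losses))
```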

Best Practices and Fixes:

- Standardized Metric Definitions: Create and maintain a master document that clearly defines every performance metric (e.g., "Annualized Net Loss," "Constant Prepayment Rate") in alignment with the prospectus. This document should be the single source of truth for all preparers.

- Maintain a Reporting Template: Develop a monthly or quarterly performance reporting template that maps directly from servicer report line items to specific XBRL elements. This ensures consistency across all reporting periods.

- Document Calculation Methodologies: Explicitly document the methodology for complex calculations like seasoning and weighted averages. This documentation should be reviewed and approved before implementation.

- Reconcile Against Source Reports: Mandate a formal reconciliation process where every XBRL-tagged performance number is checked against the original servicer or trustee report before filing. For deeper analysis, you can see how CMBS vintage performance data is tracked and compared.

7. Inadequate Control Testing and Validation Before Filing Submission

One of the most preventable yet persistent pitfalls in XBRL for ABS is the failure to implement rigorous control testing and validation before submission. This oversight occurs when filing teams treat XBRL as a last-minute formatting step and not as an integral part of the financial reporting process. Skipping crucial quality assurance checks, such as data reconciliation and validator tool runs, allows errors to pass undetected into the final EDGAR submission, undermining data integrity and creating systemic issues across subsequent filings.

This procedural gap is akin to publishing a financial statement without an audit. It bypasses the checks and balances designed to ensure accuracy, consistency, and compliance. Even minor discrepancies, like pool statistics in the XBRL that do not foot to summary tables in the HTML prospectus, can erode investor confidence and trigger regulatory scrutiny. Without a formal validation process, these errors are not only missed but often become institutionalized in templates for future deals.

Real-World Example

Imagine an ABS deal where the underlying servicer report provides updated performance data, and the legal team simultaneously makes a minor amendment to the prospectus. The XBRL preparer, working against a tight deadline, updates the XBRL based on the servicer report but misses the prospectus change. The filing is submitted without a three-way reconciliation between the servicer data, the final prospectus, and the XBRL instance. As a result, performance metrics like delinquency rates or prepayment speeds are misaligned between the human-readable prospectus and the machine-readable XBRL data. This disconnect renders the XBRL filing unreliable for any programmatic analysis or surveillance.

Automated Checks and Recommended Fixes

A disciplined, multi-stage validation framework is non-negotiable for producing reliable XBRL filings. Combining automated tools with manual review processes creates a robust defense against common errors.

Automated Checks:

- Pre-Filing Validation: Always run the filing through the official SEC EDGAR Filer Manual validation tool. Address all reported errors and thoroughly investigate every warning, as warnings often flag substantive data quality issues.

- Three-Way Reconciliation Scripts: Develop scripts that programmatically extract key data points from the source servicer reports, the parsed HTML/text prospectus, and the XBRL instance. The script can then flag any numerical or categorical mismatches automatically, highlighting discrepancies that require manual investigation.
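A three-way reconciliation script can start as a simple comparison of three dictionaries keyed by metric name. The sketch below handles numeric values only and assumes extraction from each source has already happened; the metric names and values are made up.

```python
from typing import Dict, Optional, Tuple

Triple = Tuple[Optional[float], Optional[float], Optional[float]]


def three_way_mismatches(servicer: Dict[str, float], prospectus: Dict[str, float],
                         xbrl: Dict[str, float], tolerance: float = 0.0) -> Dict[str, Triple]:
    """Return metrics that are missing from a source or differ beyond the tolerance.

    Each value is a (servicer, prospectus, xbrl) triple; None marks a missing source.
    """
    mismatches = {}
    for key in sorted(set(servicer) | set(prospectus) | set(xbrl)):
        values = (servicer.get(key), prospectus.get(key), xbrl.get(key))
        if None in values or max(values) - min(values) > tolerance:
            mismatches[key] = values
    return mismatches


servicer_report = {"delinquency_rate": 0.031, "pool_balance": 1000.0}
prospectus_tables = {"delinquency_rate": 0.029, "pool_balance": 1000.0}
xbrl_facts = {"delinquency_rate": 0.031, "pool_balance": 1000.0, "cpr": 0.12}

print(three_way_mismatches(servicer_report, prospectus_tables, xbrl_facts, tolerance=0.0005))
```

Every flagged metric still needs manual investigation to decide which source is correct.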

Best Practices and Fixes:

- Develop a Control Checklist: Create a comprehensive pre-filing checklist that covers critical validation steps, including taxonomy selection, context period accuracy, data reconciliation, and sign-offs.

- Mandate Three-Way Reconciliation: Implement a formal policy requiring a three-way tie-out between the source data (e.g., trustee/servicer reports), the final legal disclosure document (prospectus), and the XBRL filing.

- Establish Peer Review: Require a "four-eyes" review process where a second qualified team member reviews and signs off on high-risk tagging decisions and calculations before submission.

- Document All Testing: Maintain an auditable trail of all validation steps performed, including checklists, reconciliation proofs, and records of cleared validation errors. This documentation is crucial for internal audits and regulatory inquiries.
