Context Engine for Quantitative Research

How a Context Engine for Quantitative Research Transforms Data into Verifiable Insight

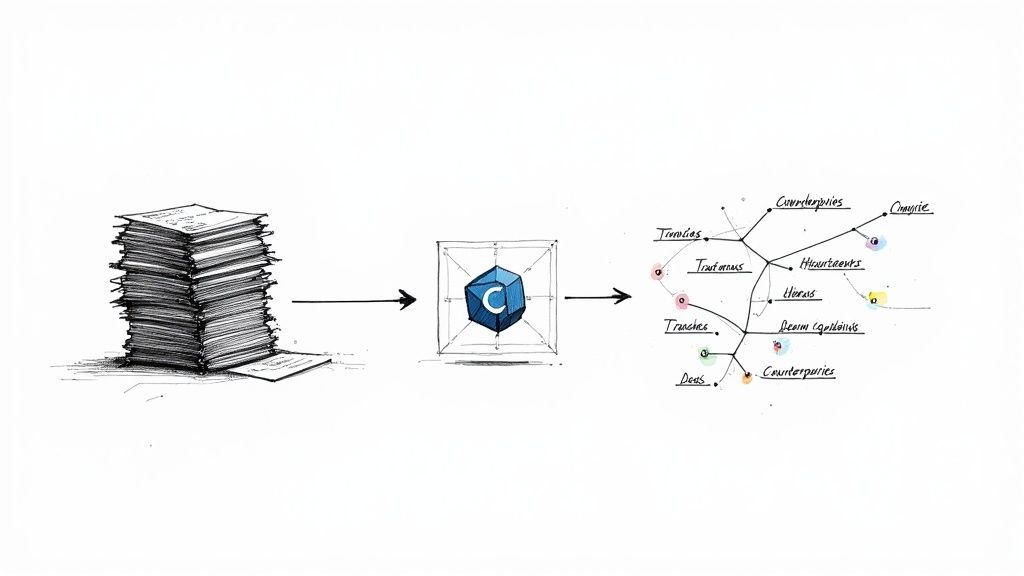

What is a context engine for quantitative research? It's a system designed to transform disconnected data points from sources like EDGAR filings, servicer reports, and loan tapes into a structured, machine-readable knowledge graph. Instead of just storing data, a context engine maps the complex relationships between financial entities—issuers, deals, servicers, and assets. The goal is to build a verifiable, auditable foundation for quantitative modeling, risk monitoring, and programmatic analysis. In structured finance, where understanding the full narrative behind a security is critical, this capability moves teams from surface-level data aggregation to deep, explainable insight. Dealcharts helps visualize this structured data, allowing analysts to cite and share verified findings.

The Strategic Need for Context in Capital Markets

Quantitative teams are drowning in unstructured data from disparate sources. Models excel at processing numbers but miss the narrative context hidden in legal filings, deal documents, and counterparty networks. This gap between raw data and verifiable intelligence is where critical risks and opportunities are missed. The problem isn't a lack of information; it's a lack of verified, structured connections. An analyst might have thousands of SEC filings and servicer reports, but linking them to answer a query like, "Which CMBS deals share a special servicer that has previously handled assets in flood-prone zones?" requires hours of manual, error-prone work.

A context engine automates this discovery by acting as intelligent connective tissue. By parsing documents to identify entities and their roles (e.g., issuer, trustee, servicer), it builds a coherent map of the structured finance ecosystem. This approach resolves persistent challenges for quant teams:

- Data Silos: It breaks down barriers between datasets, linking a CUSIP from a remittance report to its originating prospectus and all involved counterparties.

- Manual Overhead: It automates the extraction of key facts from dense documents, freeing analysts for higher-value analysis.

- Lack of Verifiability: Every fact in the knowledge graph is traced directly back to its source document, creating an immutable data lineage and audit trail.

A context engine provides the "why" behind the "what." It doesn't just report a loan's delinquency status; it links to the servicer's commentary, traces that servicer's performance across other deals, and maps the underlying property's location against macroeconomic data.

The Data and Technical Architecture of a Context Engine

To understand what a context engine for quantitative research does, you have to look at its architecture. This isn't a single piece of software but an interconnected system designed to turn raw information from sources like EDGAR 10-D filings, 424B5 prospectuses, and loan-level tapes into queryable intelligence. The engine is built on several core technical pillars.

The Knowledge Graph Backbone

The core of any context engine is its knowledge graph. This is not a static table but a dynamic network map of the structured finance universe. Every entity, whether a deal, company, tranche, or person, is a "node." The relationships between them, such as "is the servicer for" or "issued by," are "edges" connecting the nodes. This structure makes multi-hop queries natural to express, where a traditional relational database would need long chains of joins or recursive queries, and it lets analysts traverse the graph to uncover non-obvious connections. You can see these relationships by exploring the network of deals from an issuer like the J.P. Morgan CMBS shelf.
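To make the node-and-edge idea concrete, here is a minimal sketch in plain Python. The entity names, relation labels, and the `build_graph` and `shortest_path` helpers are all assumptions made for illustration; they are not taken from any real deal or from a specific graph database. The sketch stores edges as (subject, relation, object) triples and uses breadth-first search to show how a tranche connects to another deal through a shared servicer.

```python
from collections import defaultdict, deque

# Hypothetical triples (subject, relation, object); identifiers are illustrative only.
TRIPLES = [
    ("Tranche A-1", "part_of", "Deal 1"),
    ("Deal 1", "issued_by", "Issuer Alpha"),
    ("Deal 1", "serviced_by", "Servicer X"),
    ("Deal 2", "issued_by", "Issuer Beta"),
    ("Deal 2", "serviced_by", "Servicer X"),
    ("Deal 3", "serviced_by", "Servicer Y"),
]


def build_graph(triples):
    """Index every edge in both directions so traversal can follow either way."""
    graph = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((rel, obj))
        graph[obj].append((f"inverse_{rel}", subj))
    return graph


def shortest_path(graph, start, goal):
    """Breadth-first search; returns alternating nodes and relations, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for rel, nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [rel, nxt])
    return None


graph = build_graph(TRIPLES)
path = shortest_path(graph, "Tranche A-1", "Deal 2")
print(" -> ".join(path))
```

The returned path runs from the tranche to its deal, to the shared servicer, and on to the second deal. That is the kind of non-obvious connection described above, found without writing a join per hop.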

The Provenance and Lineage Layer

If the knowledge graph is the brain, the provenance layer is its conscience. This component addresses a critical question: "Where did this fact come from?" For every data point, the provenance layer maintains an unbreakable link back to its source document, often down to the page and paragraph. This creates an unimpeachable audit trail. When a model produces a result, analysts can instantly cite the filing or report that validates it. This commitment to data lineage is non-negotiable for regulated markets and enables reproducible, defensible analytics.
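One way to picture the provenance layer is as a record type in which a fact cannot exist without a pointer to its source. The sketch below is an assumption about how such a record might look; the `SourceRef` and `Fact` names, their fields, and the sample values are all hypothetical. Both classes are frozen dataclasses, so a fact and its citation cannot be modified after creation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRef:
    """Where a fact was found. All fields here are illustrative assumptions."""
    document_id: str  # hypothetical internal identifier for a filing
    form_type: str    # e.g. "10-D" or "424B5"
    page: int
    paragraph: int


@dataclass(frozen=True)
class Fact:
    """A single extracted data point that always carries its source."""
    subject: str
    attribute: str
    value: str
    source: SourceRef

    def citation(self) -> str:
        s = self.source
        return f"{s.form_type} {s.document_id}, p. {s.page}, para. {s.paragraph}"


# Made-up example values, not taken from any real filing
fact = Fact(
    subject="Loan 12",
    attribute="delinquency_status",
    value="60 days",
    source=SourceRef("DOC-0001", "10-D", page=14, paragraph=3),
)
print(f"{fact.subject} {fact.attribute} = {fact.value} [{fact.citation()}]")
```

Because `source` is a required field, any code path that produces a `Fact` has to supply the document, page, and paragraph it came from.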

APIs and LLM-Ready Outputs

The engine must communicate with external systems. This is achieved through two primary channels:

- APIs (Application Programming Interfaces): These allow developers to programmatically query the knowledge graph, pulling data directly into proprietary models, dashboards, and internal applications.

- LLM-Ready Outputs: As Large Language Models (LLMs) become central to analysis, the context engine is designed to feed them structured, citation-backed data. This grounds AI-driven reasoning in verifiable financial fact, not web-based hallucinations.
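As a rough illustration of what an "LLM-ready" output could look like, the sketch below bundles facts and their citations into a JSON document and drops anything that lacks a citation. The `to_llm_payload` function, the payload shape, and the sample facts are assumptions for illustration, not a documented format of any particular engine.

```python
import json


def to_llm_payload(deal_id, facts):
    """Serialize only citation-backed facts into a JSON string."""
    return json.dumps(
        {
            "deal_id": deal_id,
            "facts": [
                {"claim": f["claim"], "citation": f["citation"]}
                for f in facts
                if f.get("citation")  # drop anything that cannot be cited
            ],
        },
        indent=2,
    )


# Made-up facts; the second has no source and is filtered out
facts = [
    {"claim": "Loan 12 is 60 days delinquent", "citation": "10-D DOC-0001, p. 14"},
    {"claim": "Occupancy is improving", "citation": None},
]
payload = to_llm_payload("DEAL-1", facts)
print(payload)
```

Filtering at serialization time is one possible design choice: the downstream model never sees a claim that an analyst could not trace back to a document.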

A Real-World CMBS Analysis Workflow

Let's walk through a common task for a structured finance analyst: assessing risk in a Commercial Mortgage-Backed Security (CMBS) deal. The traditional method involves a manual hunt for documents and data points across disparate systems. A context engine automates this entire discovery process into a single, verifiable, programmatic query.

Imagine an analyst starts with a CUSIP. The engine initiates a chain of automated operations:

- Entity Resolution: It instantly identifies the security and links it to the parent CMBS deal.

- Document Retrieval: It programmatically retrieves all related 10-D filings and servicer reports from sources like EDGAR.

- Information Extraction: The engine parses these documents, extracting structured data (loan balances, delinquency status) and unstructured text (servicer commentary).

- Relationship Mapping: It links loans to their underlying properties and connects those properties to relevant geographic and economic data.

What once took hours of manual work becomes a single scripted query.

Example Code Snippet

This programmatic workflow can be executed with a simple Python script that interfaces with the context engine's API, automating research from start to finish. The data lineage is explicit: source document -> extracted fact -> analytical insight.

    # A simplified workflow to retrieve context for a CMBS deal
    # using a hypothetical context engine API
    import context_engine_api as ce

    # Start with a known CUSIP for a specific deal tranche
    tranche_cusip = "05537BAA7"

    # 1. Query the engine to get the parent deal and linked 10-D filings
    # Data lineage: CUSIP -> Deal -> Filings (Source: EDGAR)
    deal_filings = ce.get_linked_filings(cusip=tranche_cusip)

    # 2. Extract servicer comments from the latest filing
    # Data lineage: Filing -> Servicer Comments (Source: 10-D Exhibit)
    latest_filing_id = deal_filings[0]['id']
    servicer_comments = ce.extract_servicer_comments(filing_id=latest_filing_id)

    # 3. Identify properties with negative sentiment in comments
    # Transformation: Apply NLP model to unstructured text
    troubled_properties = ce.analyze_sentiment(comments=servicer_comments)

    # 4. Pull regional economic data for those properties
    # Data lineage: Property Address -> Zip Code -> Economic Data (Source: 3rd party)
    for prop in troubled_properties:
        prop['economic_data'] = ce.get_regional_data(zip_code=prop['zip_code'])

    # Output the enriched, context-aware results for further modeling
    # Insight: Properties with negative servicer commentary correlated with local economic indicators
    print(troubled_properties)

This snippet demonstrates how a complex research task becomes a logical chain of API calls. The analyst receives not just a data dump, but a verifiable trail of information. This explainability is key—every data point can be traced back to its source, enabling analysts to build models on a foundation of trust. An analyst could start with a high-level view on the BMARK 2025-V14 deal page and use such a workflow to dive into loan-level specifics.
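Because `context_engine_api` is hypothetical, the snippet above cannot be run as written. The following sketch is an in-memory stand-in that exposes the same four function names, so the chain of calls can be exercised end to end. The `StubContextEngine` class, the keyword-based sentiment check, and every returned value are assumptions made up for illustration. Each record carries a `source` field so the lineage stays visible at every step.

```python
class StubContextEngine:
    """In-memory stand-in for the hypothetical API; all data below is made up."""

    def get_linked_filings(self, cusip):
        # Newest filing first, matching the snippet's use of index 0
        return [
            {"id": "FILING-2", "form": "10-D", "cusip": cusip},
            {"id": "FILING-1", "form": "10-D", "cusip": cusip},
        ]

    def extract_servicer_comments(self, filing_id):
        return [
            {"property": "P1", "zip_code": "00001", "source": filing_id,
             "text": "Tenant vacated; loan transferred to special servicing."},
            {"property": "P2", "zip_code": "00002", "source": filing_id,
             "text": "Property performing as expected."},
        ]

    def analyze_sentiment(self, comments):
        # Keyword match as a placeholder for a real NLP model
        flags = ("vacated", "special servicing", "default")
        return [dict(c) for c in comments
                if any(k in c["text"].lower() for k in flags)]

    def get_regional_data(self, zip_code):
        # Placeholder record; a real engine would return third-party indicators
        return {"zip_code": zip_code, "source": "third-party (placeholder)"}


ce = StubContextEngine()
deal_filings = ce.get_linked_filings(cusip="05537BAA7")
latest_filing_id = deal_filings[0]["id"]
servicer_comments = ce.extract_servicer_comments(filing_id=latest_filing_id)
troubled_properties = ce.analyze_sentiment(comments=servicer_comments)
for prop in troubled_properties:
    prop["economic_data"] = ce.get_regional_data(zip_code=prop["zip_code"])
print(troubled_properties)
```

Swapping the stub for a real client would leave the workflow lines unchanged, which also makes the stub a convenient fixture for testing downstream models.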

Implications for Modeling and AI

The primary benefit of a context engine for quantitative research is superior analytical outcomes. It enhances both traditional quantitative models and modern AI systems by enabling a "model-in-context" approach.

For statistical models, the engine allows for powerful feature engineering. Analysts can move beyond standard financial metrics and create features based on the market's interconnected structure. A credit risk model could incorporate features derived from the sentiment of servicer commentary, the network centrality of a deal's legal counsel, or a trustee's historical performance.
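As one example of a structure-based feature, the sketch below computes a simple degree centrality for legal counsel: the share of deals in the sample that each firm is attached to. The deal and firm names are hypothetical, and a production feature would more likely be computed over the full knowledge graph with a dedicated graph library.

```python
from collections import Counter

# Hypothetical deal-to-counsel assignments
deal_counsel = {
    "DEAL-1": "Firm A",
    "DEAL-2": "Firm A",
    "DEAL-3": "Firm B",
    "DEAL-4": "Firm A",
}


def counsel_degree_centrality(deal_counsel):
    """Degree of each firm in the deal-counsel bipartite graph, divided by deal count."""
    counts = Counter(deal_counsel.values())
    n_deals = len(deal_counsel)
    return {firm: count / n_deals for firm, count in counts.items()}


centrality = counsel_degree_centrality(deal_counsel)

# Attach the firm-level score to each deal as a model feature
features = {
    deal: {"counsel_centrality": centrality[firm]}
    for deal, firm in deal_counsel.items()
}
print(features)
```

The resulting per-deal dictionary can be merged into a feature matrix alongside standard financial metrics.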

The impact on Large Language Models (LLMs) is even more critical. LLMs are prone to "hallucination," fabricating information when uncertain. In finance, this is an unacceptable risk. A context engine acts as a "source of truth" by providing the LLM with a clean, structured stream of verifiable data. When those facts are retrieved at query time and supplied to the model alongside the question, the pattern is known as Retrieval-Augmented Generation (RAG). RAG does not eliminate hallucination, but it steers the model to reason over auditable financial facts, so analysts can ask complex questions in natural language and check each answer against the cited source documents.
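The retrieval and prompt-assembly half of this pattern can be sketched without calling any model. In the example below, the `retrieve` and `build_prompt` functions, the word-overlap scoring (a crude stand-in for vector search), and the sample facts are all assumptions for illustration. The final prompt would then be passed to whichever LLM a team uses.

```python
# Made-up facts with made-up citations
FACTS = [
    {"text": "Loan 12 is 60 days delinquent", "source": "10-D DOC-0001, p. 14"},
    {"text": "Servicer X is special servicer for Deal 1", "source": "424B5 DOC-0002, p. 88"},
    {"text": "Deal 3 closed with 40 loans", "source": "424B5 DOC-0003, p. 5"},
]


def retrieve(question, facts, k=2):
    """Rank facts by the number of words they share with the question."""
    q_words = set(question.lower().split())
    scored = [(len(q_words & set(f["text"].lower().split())), f) for f in facts]
    scored = [s for s in scored if s[0] > 0]  # discard facts with no overlap
    scored.sort(key=lambda s: s[0], reverse=True)
    return [f for _, f in scored[:k]]


def build_prompt(question, retrieved):
    """Number each fact and attach its source so the answer can cite it."""
    context = "\n".join(
        f"[{i}] {f['text']} (source: {f['source']})"
        for i, f in enumerate(retrieved, 1)
    )
    return (
        "Answer using only the numbered facts below and cite them by number. "
        "If the facts are insufficient, say so.\n\n"
        f"Facts:\n{context}\n\nQuestion: {question}"
    )


question = "delinquent loan status"
retrieved = retrieve(question, FACTS)
prompt = build_prompt(question, retrieved)
print(prompt)
```

Numbering the facts gives the model a compact way to cite, and the explicit "say so" instruction gives it an alternative to guessing when retrieval comes back thin.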

How Dealcharts Helps

Dealcharts connects these datasets—filings, deals, shelves, tranches, and counterparties—so analysts can publish and share verified charts without rebuilding data pipelines. It serves as the application layer for the context engine, transforming structured data into shareable, citation-backed visualizations. This allows teams to shift focus from data plumbing to high-value analysis and communication, with full confidence in the underlying data lineage. Analysts can explore macro trends, such as the entire 2023 CMBS vintage, then drill down into specific deals with a clear, verifiable context.

Conclusion

The purpose of building a context engine for quantitative research is to merge deep data context with verifiable explainability. This marks a fundamental shift away from siloed datasets and opaque "black box" models toward an interconnected, transparent analytical ecosystem. By automating the laborious work of data discovery and linkage, these engines empower analysts to produce higher-quality, more accurate, and fully reproducible work. This approach establishes a new standard for rigor in quantitative finance, ensuring that every insight is built on a foundation of auditable fact—a core principle of the broader CMD+RVL framework for reproducible finance.
